Robots.txt: Understanding Its Role and Impact on SEO

Search engine optimization (SEO) involves many technical details, and one of them is the robots.txt file. It may look intimidating at first, but understanding how robots.txt works and how it affects SEO is important for website owners and digital marketers. This article explains what robots.txt does, why it matters, and how it can influence the way search engines crawl and rank your website.

What is Robots.txt?

Robots.txt is a plain-text file that sits in the root directory of a website, for example at https://www.example.com/robots.txt. It serves as a communication tool between website owners and web crawlers, telling search engine bots which parts of the site they may crawl and which they should skip. The instructions are written as a small set of directives that well-behaved crawlers consult before fetching other pages. Note that robots.txt governs crawling, not indexing: a blocked URL can still appear in search results if other pages link to it.
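
Because crawlers only look for the file at the top level of a host, you can work out which robots.txt governs any page from the page's URL alone. The short Python sketch below illustrates this; the function name robots_txt_url and the example.com URLs are hypothetical, chosen only for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url (illustrative helper).

    Crawlers look for the file only at the top level of the host, so the
    path, query string and fragment of the page URL are discarded. The
    scheme and host (including any port) are kept, because each
    protocol/host/port combination has its own robots.txt.
    """
    parts = urlsplit(page_url)
    if not parts.scheme or not parts.netloc:
        raise ValueError(f"expected an absolute URL, got {page_url!r}")
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://www.example.com/blog/post?id=7#top"))
# https://www.example.com/robots.txt
print(robots_txt_url("https://shop.example.com:8443/cart"))
# https://shop.example.com:8443/robots.txt
```

A subdomain such as shop.example.com therefore needs its own robots.txt; the file on www.example.com does not cover it.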

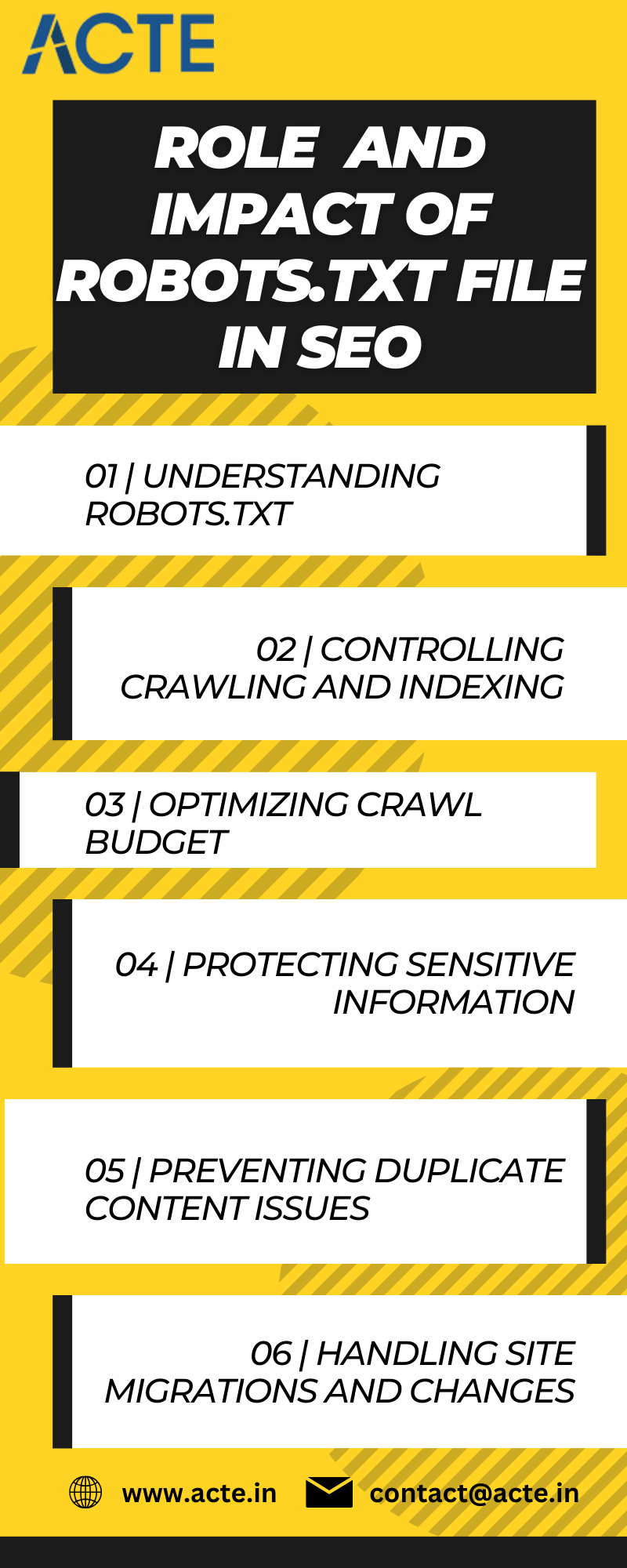

Why is Robots.txt Important for SEO?

The robots.txt file plays a vital role in SEO for the following reasons:

- Controlling Crawling:

With robots.txt directives, website owners can tell search engine bots which portions of their website to crawl and which to skip. This is useful for keeping bots away from duplicate or low-value content and for steering them toward the most important sections.

- Preserving Crawl Budget:

Search engines allocate a limited crawl budget to each website, which determines how often and how deeply its pages are crawled. By disallowing low-value sections, such as internal search result pages or endless filter combinations, you keep crawlers from spending that budget there, so more of it goes to your most important content. Crawl budget is mainly a concern for large sites; small sites are usually crawled thoroughly anyway.

- Keeping Crawlers Out of Private Sections:

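Robots.txt can ask search engine bots not to crawl areas that have no place in search results, such as login pages, admin sections, or internal tools. It is not a security mechanism, however: the file is publicly readable, so it actually reveals the paths you list, and crawlers that ignore the rules can still request those URLs. Genuinely confidential content should be protected with authentication.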
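
To see what these controls mean in practice, the sketch below uses Python's standard urllib.robotparser module to evaluate a hypothetical robots.txt that keeps crawlers out of an admin area, a login page and internal search results. It models only the decision a crawler that honours robots.txt would make; the file itself enforces nothing, and the paths and URLs are illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep compliant crawlers out of the admin area, the login
# page and internal search results (an endless source of low-value URLs).
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /login
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse() takes an iterable of lines

for path in ["/products/blue-widget", "/admin/settings", "/login", "/search?q=widgets"]:
    url = "https://www.example.com" + path
    verdict = "crawl" if parser.can_fetch("Googlebot", url) else "skip"
    print(f"{verdict:5} {url}")
```

Googlebot falls under the * group here because no group in the file names it specifically.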

Understanding Robots.txt Directives:

Robots.txt uses directives or commands to communicate with search engine bots. Here are some commonly used directives:

- User-agent:

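This directive names the crawler that the rules following it apply to. For example, “User-agent: Googlebot” targets Google’s crawler, while “User-agent: *” applies to all bots. Google’s crawlers obey only the most specific group that matches their name, so a Googlebot group replaces, rather than adds to, the rules in the * group.

- Disallow: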

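The “Disallow” directive lists paths that should not be crawled. Values are matched as prefixes of the URL path: “Disallow: /private” blocks the “/private” directory, but also URLs such as “/private-notes.html”; use “Disallow: /private/” to block only the directory. An empty “Disallow:” line blocks nothing.

- Allow: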

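The “Allow” directive creates exceptions to a “Disallow” rule, so specific paths can be crawled even when their parent directory is disallowed. When both an Allow and a Disallow rule match a URL, Google and the Robots Exclusion Protocol standard (RFC 9309) apply the most specific one, that is, the rule with the longest matching path.

- Sitemap: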

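The “Sitemap” directive tells search engines where your XML sitemap lives, for example “Sitemap: https://www.example.com/sitemap.xml”. The value should be a full, absolute URL, and the line applies regardless of which User-agent group it appears near. Including it helps search engines discover your website’s pages more efficiently.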
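
How Allow and Disallow rules within one group can be resolved is sketched below in Python. It is a simplified illustration that assumes plain prefix matching with no * or $ wildcards: among the rules that match a path, the longest path wins, and an Allow beats a Disallow of equal length, which is the precedence RFC 9309 describes. The is_allowed function and the rule list are hypothetical.

```python
from typing import List, Tuple

# A rule is ("allow" | "disallow", path_prefix) taken from one User-agent group.
Rule = Tuple[str, str]


def is_allowed(rules: List[Rule], path: str) -> bool:
    """Decide whether `path` may be crawled, using most-specific-match precedence.

    Simplified sketch: plain prefix matching only (no '*' or '$' wildcards).
    Among all rules whose path is a prefix of `path`, the one with the
    longest path wins; if an Allow and a Disallow tie in length, Allow wins.
    A path that matches no rule is allowed.
    """
    best_len = -1
    allowed = True
    for directive, prefix in rules:
        if not prefix:
            continue  # an empty value such as "Disallow:" places no restriction
        if not path.startswith(prefix):
            continue
        is_allow = directive.lower() == "allow"
        if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
            best_len = len(prefix)
            allowed = is_allow
    return allowed


rules = [
    ("disallow", "/private"),
    ("allow", "/private/press-kit"),
]
print(is_allowed(rules, "/private/payroll.html"))        # False
print(is_allowed(rules, "/private/press-kit/logo.png"))  # True
print(is_allowed(rules, "/private-notes.txt"))           # False: plain prefix match
print(is_allowed(rules, "/blog/"))                       # True: no rule matches
```

Not every parser follows this precedence. Python's own urllib.robotparser applies the first matching rule in file order, so with that parser an Allow exception has to appear before the broader Disallow line.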

Best Practices for Using Robots.txt:

To optimize the impact of robots.txt on your website’s SEO, follow these best practices:

- Use a Valid Syntax:

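Make sure your robots.txt file follows the correct syntax: one directive per line in the form “Field: value”, with rules grouped under a User-agent line. Even a small mistake can have large consequences; a stray “Disallow: /” under “User-agent: *”, for instance, blocks compliant crawlers from your entire website.

- Test and Verify: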

After creating or modifying your robots.txt file, check it with the robots.txt report in Google Search Console or with other webmaster tools such as Bing Webmaster Tools. This lets you catch issues or unintended consequences before they affect your search rankings.

- Be Transparent:

Avoid using robots.txt to block pages that should be publicly accessible. If search engines cannot crawl valuable content, they cannot evaluate it, and your rankings may suffer as a result.

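Robots.txt works alongside meta robots tags. Robots.txt controls crawling at the level of paths and directories, while a meta robots tag such as <meta name="robots" content="noindex"> controls whether an individual page is indexed. The two must not conflict: if robots.txt blocks a page, crawlers never fetch it and therefore never see its noindex tag, so the URL may still be indexed through links from elsewhere. To keep a page out of search results, leave it crawlable and mark it noindex.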
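
Some of these checks can be automated before a file goes live. The Python sketch below is a rough, hypothetical linter (lint_robots_txt and blocked_noindex_pages are illustrative names, not an existing tool). It flags lines without a colon, fields other than the four covered in this article, rules that appear before any User-agent line, a blanket “Disallow: /” for all crawlers, and relative Sitemap URLs. It also lists pages that carry a noindex tag but are blocked from crawling, using urllib.robotparser. It complements, rather than replaces, testing in Google Search Console.

```python
from typing import List
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser

# Fields covered in this article. Others exist (e.g. Crawl-delay), but this
# sketch simply reports them as unrecognised.
KNOWN_FIELDS = {"user-agent", "disallow", "allow", "sitemap"}


def lint_robots_txt(text: str) -> List[str]:
    """Return warnings for common robots.txt mistakes (hypothetical helper)."""
    warnings: List[str] = []
    current_agents: List[str] = []  # User-agent values of the current group
    in_rules = False  # True once the current group has Allow/Disallow lines
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            warnings.append(f"line {number}: missing ':' in {line!r}")
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN_FIELDS:
            warnings.append(f"line {number}: unrecognised field {field!r}")
        elif field == "user-agent":
            if in_rules:  # a User-agent after rules starts a new group
                current_agents, in_rules = [], False
            current_agents.append(value)
        elif field in ("allow", "disallow"):
            if not current_agents:
                warnings.append(f"line {number}: {field} rule before any User-agent line")
            in_rules = True
            if field == "disallow" and value == "/" and "*" in current_agents:
                warnings.append(f"line {number}: 'Disallow: /' blocks the whole site for all crawlers")
        else:  # sitemap: should be a full URL with scheme and host
            parts = urlsplit(value)
            if not (parts.scheme and parts.netloc):
                warnings.append(f"line {number}: Sitemap should be an absolute URL, got {value!r}")
    return warnings


def blocked_noindex_pages(text: str, noindex_urls: List[str], agent: str = "Googlebot") -> List[str]:
    """Return URLs that carry a noindex tag but are disallowed for `agent`.

    A compliant crawler never fetches these pages, so it never sees their
    noindex tag. Note: urllib.robotparser applies the first matching rule
    in file order, not the most specific one.
    """
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return [url for url in noindex_urls if not parser.can_fetch(agent, url)]


SAMPLE = """\
Disallow: /tmp/
User-agent: *
Disallow: /
Sitemap: /sitemap.xml
Crawl-delay 10
"""

GOOD = """\
User-agent: *
Disallow: /checkout/
Sitemap: https://www.example.com/sitemap.xml
"""

for warning in lint_robots_txt(SAMPLE):
    print(warning)

print(blocked_noindex_pages(GOOD, [
    "https://www.example.com/checkout/thanks",  # hypothetical noindex pages
    "https://www.example.com/tag/misc",
]))
```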

Conclusion:

Understanding the role of robots.txt is essential for effective SEO. By using robots.txt directives properly, you can guide search engine bots, control which parts of your site are crawled, preserve crawl budget, and keep crawlers out of private areas.

Remember to follow the best practices above, test your robots.txt file after every change, and combine it with meta robots tags where you need page-level control over indexing. A well-configured robots.txt helps search engines spend their time on the pages that matter most, which supports your website’s visibility in search results.
