Unlocking Data Potential: A Guide to AWS Data Engineering

Data engineering is an essential field that focuses on the design, development, and management of systems responsible for collecting, storing, and analyzing data. In the AWS (Amazon Web Services) ecosystem, data engineering leverages a robust array of cloud services to help organizations fully realize their data potential.

If you want to excel in this career path, then it is recommended that you upgrade your skills and knowledge regularly with the latest AWS Training in Chennai.

Understanding AWS Data Engineering

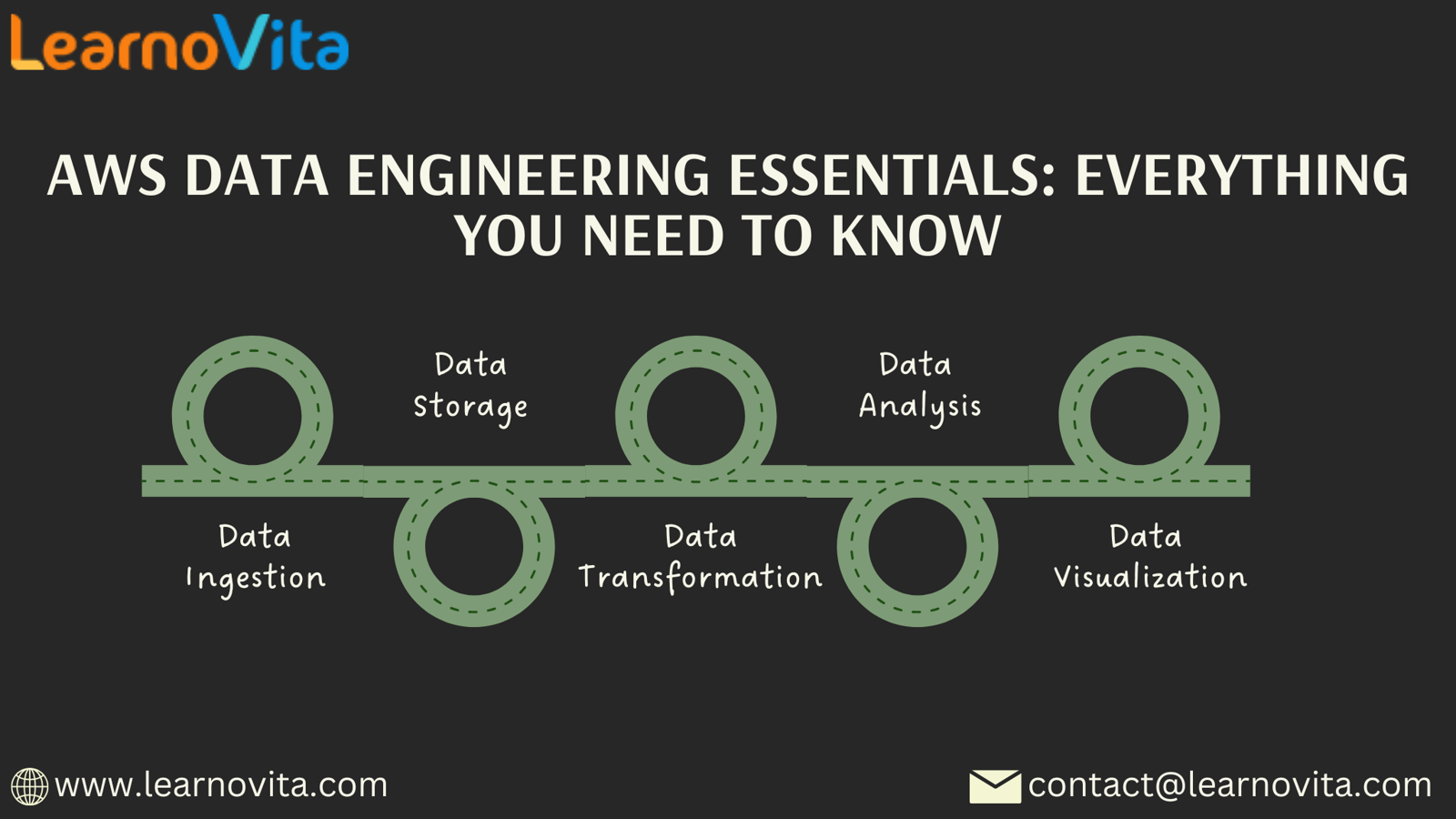

AWS Data Engineering involves a variety of methods and practices used to manage and process data through AWS offerings. Key activities include:

- Data Ingestion: Acquiring data from diverse sources such as databases, APIs, and streaming services.

- Data Storage: Selecting the right solutions for managing large datasets effectively.

- Data Transformation: Cleaning and reshaping data into a usable format.

- Data Analysis: Extracting meaningful insights to facilitate decision-making.

- Data Visualization: Presenting insights visually to aid understanding among stakeholders.

Key AWS Services for Data Engineering

AWS offers a wide range of services tailored for data engineering tasks. Here are some of the most significant tools available:

- Amazon S3 (Simple Storage Service): A scalable object storage service for straightforward data storage and retrieval.

- AWS Glue: A fully managed ETL (Extract, Transform, Load) service that simplifies data preparation.

- Amazon Redshift: A high-performance data warehousing service optimized for fast querying and analysis.

- Amazon Kinesis: A platform built for real-time data streaming and processing.

- AWS Lambda: A serverless compute service that executes code in response to events, ideal for various data processing tasks.

- Amazon EMR (Elastic MapReduce): A managed Hadoop framework designed for efficient large-scale data processing.

Creating an Effective Data Engineering Pipeline on AWS

Step 1: Data Ingestion

The initial phase in establishing a data engineering pipeline involves ingesting data from various sources. This can be done through batch processing (e.g., overnight imports) or real-time streaming (e.g., processing logs or transactions as they happen).

Step 2: Data Storage

After ingestion, it is crucial to store the data appropriately. Depending on your requirements, consider leveraging:

- Amazon S3 for raw data storage.

- Amazon RDS for structured data management using SQL.

- Amazon DynamoDB for flexible NoSQL database solutions.

Step 3: Data Transformation

Raw data often requires cleaning and transformation before it can be effectively analyzed. AWS Glue automates much of this process, enabling you to configure ETL jobs to manage various data types.

Step 4: Data Analysis

Once the data has been transformed, it becomes ready for analysis. AWS provides several tools, including Amazon Redshift for data warehousing and Amazon Athena for SQL queries on data stored in S3.

Step 5: Data Visualization

Finally, insights generated from data analysis should be effectively visualized. Tools like Amazon QuickSight enable you to present data findings in a clear and accessible format, making it easier for stakeholders to make informed decisions.

Best Practices for Effective AWS Data Engineering

- Utilize Serverless Solutions: Take advantage of AWS Lambda and other serverless services to optimize costs and resource management.

- Focus on Security: Ensure that data is encrypted both during transit and at rest; utilize AWS IAM for controlled access to data and services.

- Monitor and Optimize Performance: Leverage AWS CloudWatch for performance tracking and to refine your data pipelines as necessary.

Conclusion

AWS Data Engineering is critical for organizations striving to leverage their data strategically. By taking full advantage of the extensive services AWS offers, businesses can design scalable, efficient, and secure data pipelines that yield actionable insights.

Comments

Post a Comment